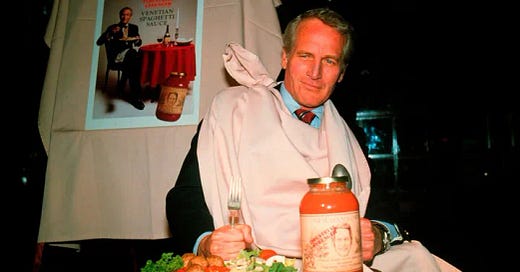

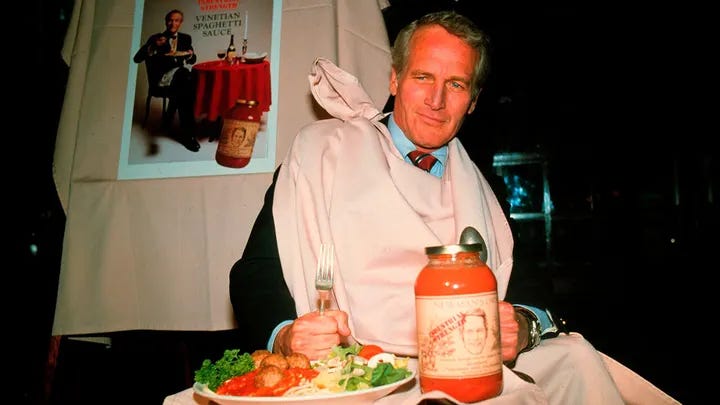

Paul Newman said, “To be an actor you have to be a child.” Maybe that’s true. But to build an iconic brand selling salad dressing—and actually succeed—requires something more elusive: taste.

Taste is that subtle sense for what works, not just technically but contextually. It's intuitive, difficult to articulate, yet instantly recognizable. Newman didn't succeed because his dressing scored better against some precise recipe standard. He succeeded because he intuitively understood what people wanted, even when they couldn't explain it themselves.

AI faces exactly the same challenge. We’ve become conditioned to evaluate AI primarily through narrow lenses: accuracy metrics, precision, unit tests. But technical correctness alone rarely produces results that feel genuinely right. AI outputs can pass every conceivable benchmark and still fall flat in real-life contexts, precisely because they lack taste—the subtle sense for context and implicit human preferences.

The Taste Problem

Most people struggle to clearly express what they prefer. Ask someone why one shirt feels stylish, why one salad dressing tastes better, or why one code snippet seems “cleaner,” and you’ll get vague, intuitive answers. These judgments aren’t random; they’re deeply contextual. People recognize when something feels right, even if they can’t pinpoint exactly why.

Even Code Isn’t Immune

Consider merging user records from two sources:

Version A: Technically Correct, Contextually Blind

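```python
def merge_data(source1, source2):
    merged = {}
    # Index source1 records by user_id
    for item in source1:
        merged[item["user_id"]] = item
    # Any source2 record with the same user_id replaces the source1 record wholesale
    for item in source2:
        merged[item["user_id"]] = item
    return list(merged.values())
```

This solution is objectively correct, passing unit tests without issue. But it ignores a common real-world scenario: what happens when two entries conflict? Here, whenever both sources contain the same user_id, the source2 record silently replaces the source1 record in full, discarding any fields only source1 had. Whose data do you trust more? Without addressing these preferences explicitly, correctness means little.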
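To make the conflict concrete, here is a small, self-contained run of Version A on two hypothetical records. The crm and signup lists, their fields, and their values are made up for illustration: source1 knows the user's name and phone, while source2 only carries a newer email.

```python
def merge_data(source1, source2):
    # Version A: later records overwrite earlier ones wholesale
    merged = {}
    for item in source1:
        merged[item["user_id"]] = item
    for item in source2:
        merged[item["user_id"]] = item
    return list(merged.values())


# Hypothetical records: source1 has name and phone, source2 has a newer email
crm = [{"user_id": 1, "name": "Ada", "email": "ada@old.example", "phone": "555-0100"}]
signup = [{"user_id": 1, "email": "ada@new.example"}]

result = merge_data(crm, signup)
print(result)
# [{'user_id': 1, 'email': 'ada@new.example'}]  (name and phone are gone)
```

Every user_id survives, so a test that only checks "one record per user" passes, yet the merged record has lost information nobody asked to discard.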

Version B: Technically Correct, Contextually Aware

```python
def merge_data_entries(entry_a, entry_b):
    # Explicitly prefer entry_b for conflicts; fall back to entry_a when
    # entry_b lacks the field (.get also avoids a KeyError if entry_a lacks it)
    return {
        "user_id": entry_a["user_id"],
        "name": entry_b.get("name", entry_a.get("name")),
        "email": entry_b.get("email", entry_a.get("email")),
    }


def merge_data(source1, source2):
    merged = {}
    # Index source1 records by user_id
    for item in source1:
        merged[item["user_id"]] = item
    # Fold in source2, resolving conflicts field by field
    for item in source2:
        if item["user_id"] in merged:
            merged[item["user_id"]] = merge_data_entries(merged[item["user_id"]], item)
        else:
            merged[item["user_id"]] = item
    return list(merged.values())
```

Now we have something better: not just technically correct, but explicit about real-world complexity. This second solution demonstrates taste because it writes down an implicit business rule (when source2 has a value, trust it; otherwise keep what source1 had) instead of letting whichever record arrives last win wholesale. It doesn't merely "work"; it works the way people actually want it to.
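Version B still drops any field it does not name explicitly (a phone column, for example), and because entry_b.get returns whatever entry_b holds, a None in source2 will overwrite a good value from source1. If the business rule were instead "prefer source2 unless its value is missing or None, and never lose a field," a field-agnostic variant might look like this minimal sketch. The rule itself, the merge_entries name, and the sample records are assumptions for illustration, not part of the original example.

```python
def merge_entries(entry_a, entry_b):
    """Keep every field from both records, preferring entry_b's value
    unless it is None (hypothetical business rule)."""
    merged = dict(entry_a)  # copy so the input record is not mutated
    for field, value in entry_b.items():
        if value is not None:
            merged[field] = value
    return merged


def merge_data(source1, source2):
    merged = {}
    for item in source1:
        merged[item["user_id"]] = dict(item)
    for item in source2:
        uid = item["user_id"]
        merged[uid] = merge_entries(merged[uid], item) if uid in merged else dict(item)
    return list(merged.values())


# Made-up records: source2 has a newer email but an empty (None) name for user 1
crm = [{"user_id": 1, "name": "Ada", "email": "ada@old.example", "phone": "555-0100"}]
signup = [
    {"user_id": 1, "name": None, "email": "ada@new.example"},
    {"user_id": 2, "name": "Grace", "email": "grace@example.com"},
]

result = merge_data(crm, signup)
print(result)  # user 1 keeps name and phone and takes the new email; user 2 is added
```

Whether None should count as "no information" or as a deliberate deletion is exactly the kind of preference the code cannot decide on its own; it has to come from the people who use the data.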

Why Great Evaluation Requires Context

If you want great results from AI, you need great evaluation. But great evaluation isn’t about more stringent metrics or more thorough unit tests. It’s about explicitly acknowledging the complexity and context of the real world. Without capturing context and preference—without embedding taste in evaluations—we remain stuck at surface-level correctness.

Context, however, is subtle. Preferences are rarely explicit. Humans often don’t even know precisely why they prefer one approach over another—they simply recognize something is right. Most evaluation frameworks overlook this subtlety entirely, opting instead for metrics that are easy to measure yet fundamentally inadequate.

Learning Taste, Teaching AI

Taste represents that intangible, difficult-to-define quality that separates something merely correct from something genuinely valuable. Technical correctness is the floor, not the ceiling. In every creative endeavor—whether clothing, food, or AI—the ultimate goal is creating something intuitive, effortless, and “just right.”

Just as Paul Newman learned taste by experimenting and trusting intuition, AI can similarly develop taste—provided evaluation is designed directly into real-world workflows. Instead of passively relying on isolated human judgment, evaluation must happen continuously and contextually. By embedding AI in the everyday workflows where decisions naturally occur, subtle human preferences, implicit trade-offs, and intuitive judgments become visible and learnable.
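One hypothetical way to make in-workflow judgments visible and learnable is to log, at the moment a user picks between two candidate outputs, which one they kept, and then aggregate those choices. This sketch assumes a simple pairwise setup; the Judgment record, the win_rates helper, the variant labels, and the sample log are all invented for illustration, and a real system would likely record richer context per judgment.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Judgment:
    """One in-workflow decision: which of two candidate outputs the user kept."""
    context: str   # where the decision happened, e.g. a task or ticket
    chosen: str    # label of the variant the user accepted
    rejected: str  # label of the variant the user passed over


def win_rates(judgments):
    """Fraction of the head-to-head comparisons each variant won."""
    wins = defaultdict(int)
    appearances = defaultdict(int)  # comparisons each variant took part in
    for j in judgments:
        wins[j.chosen] += 1
        appearances[j.chosen] += 1
        appearances[j.rejected] += 1
    return {variant: wins[variant] / appearances[variant] for variant in appearances}


# Made-up log of choices captured during everyday merge tasks
log = [
    Judgment("merge CRM export", chosen="version_b", rejected="version_a"),
    Judgment("merge billing export", chosen="version_b", rejected="version_a"),
    Judgment("merge signup list", chosen="version_a", rejected="version_b"),
]
rates = win_rates(log)
print(rates)
```

Raw win rates are the simplest aggregate; when many variants are compared unevenly, a pairwise preference model such as Bradley–Terry is a more principled choice.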

Over time, this continuous interaction pushes AI applications beyond mere correctness. They begin delivering outcomes that feel fundamentally aligned with human expectations, capturing the essence of something valuable.

In other words, good taste doesn't just matter; it's essential.
